| Summary |

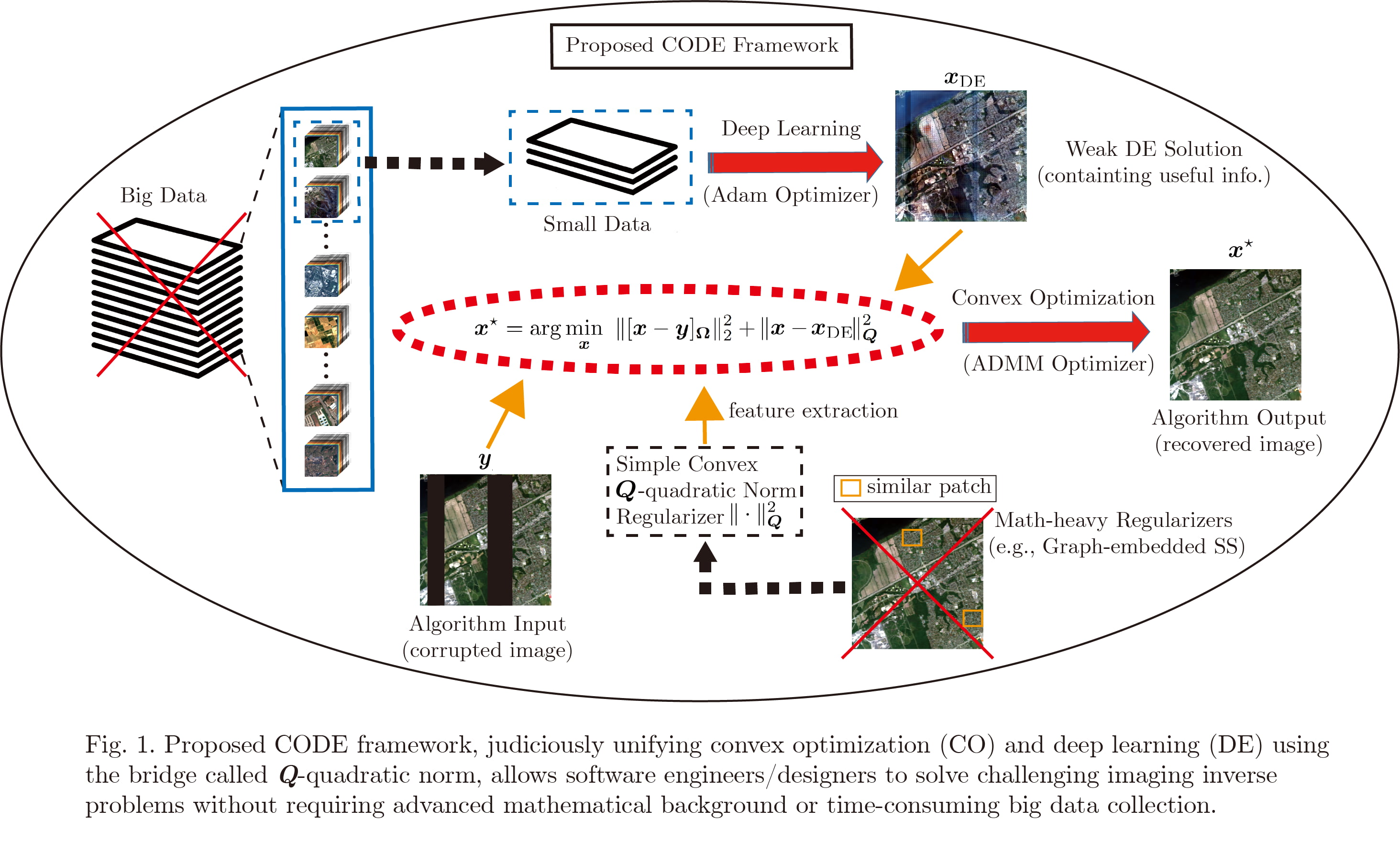

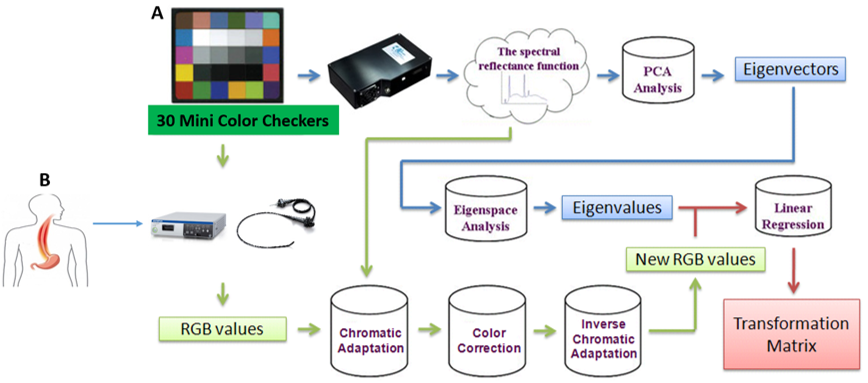

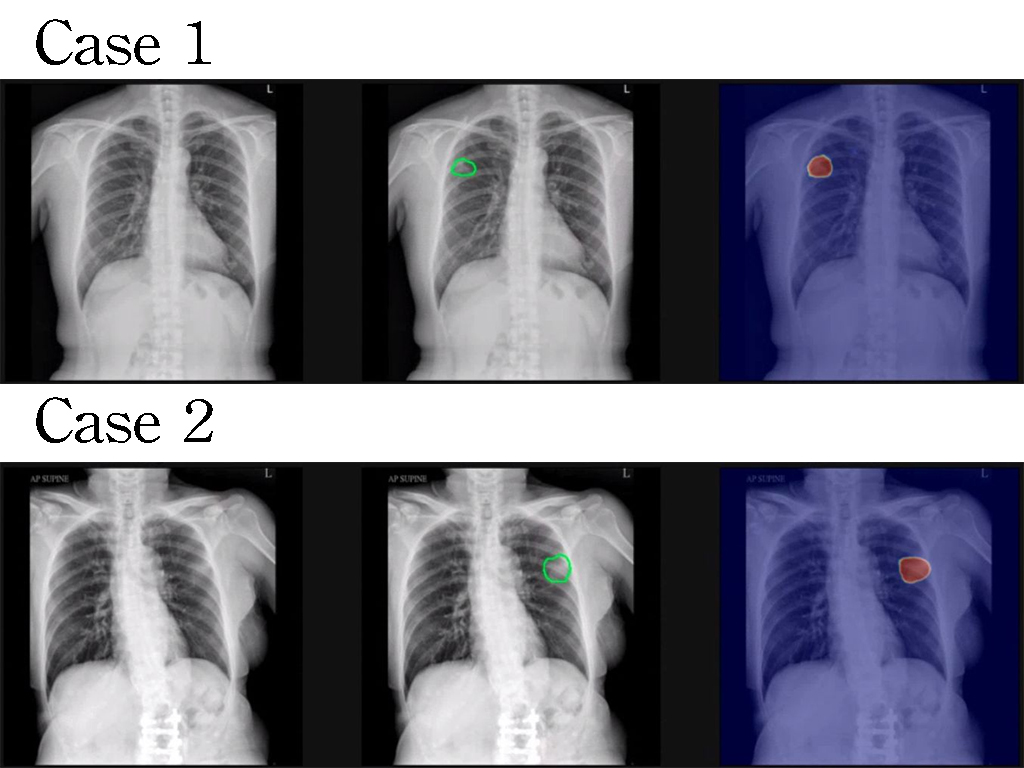

This research develops innovative technologies for hyperspectral satellite image sensing, fusion, and secure transmission. By combining the advantages of multispectral and hyperspectral images, it enhances both spatial and spectral resolution. It also integrates deep learning and deepfake image recognition mechanisms, and the underlying technology has been published in top-tier journals such as IEEE TGRS. The result improves image transmission efficiency while also ensuring image authenticity. |

| Scientific Breakthrough |

We have published papers in top-tier journals, such as IEEE TGRS, demonstrating breakthroughs in compressed sensing and fusion technologies for satellite images. TGRS has an impact factor of 8.2, and publication there highlights the international standing of our research. Our real-time compressed sensing technology requires only integer matrix multiplication, which improves efficiency by over 20% and makes it suitable for microsatellites. It runs at more than 30 frames per second (FPS > 30) and achieves perfect restoration under high-noise conditions, yielding high-quality output. |
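As a rough illustration of why an encoder built only on integer matrix multiplication suits constrained onboard hardware, the sketch below compresses one pixel's spectrum into a few integer measurements. It is not the published method: the ±1 measurement matrix, the function names `make_sensing_matrix` and `compress_pixel`, and the band values are all assumptions made for illustration.

```python
import random


def make_sensing_matrix(m, n, seed=0):
    """Build a hypothetical m x n measurement matrix with entries in {-1, +1}."""
    rng = random.Random(seed)
    return [[rng.choice((-1, 1)) for _ in range(n)] for _ in range(m)]


def compress_pixel(phi, spectrum):
    """Map n integer band values to m integer measurements (y = phi @ x).

    Only integer additions and multiplications are used, so no
    floating-point unit is needed on the encoder side.
    """
    return [sum(p * s for p, s in zip(row, spectrum)) for row in phi]


# Illustrative numbers only: 8 spectral bands compressed to 3 measurements.
phi = make_sensing_matrix(m=3, n=8)
spectrum = [120, 118, 97, 64, 60, 59, 200, 210]  # made-up 8-bit band values
measurements = compress_pixel(phi, spectrum)
```

In a sketch like this, the satellite would transmit only `measurements`, and recovering the full spectrum would be left to a ground-side decoder, which is not shown here.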

| Industrial Applicability |

The global satellite remote sensing market is projected to grow from $12 billion in 2020 to $20 billion by 2025. The hyperspectral and multispectral image fusion technology developed in this research is suitable for environmental monitoring and disaster response, where it provides remote sensing images with high spatiotemporal resolution. Potential users include government agencies and commercial companies, and the technology can create economic benefits across multiple industries. |