| Technical Name | Image-based Parking Space Detection | ||

|---|---|---|---|

| Project Operator | National Chung Cheng University | ||

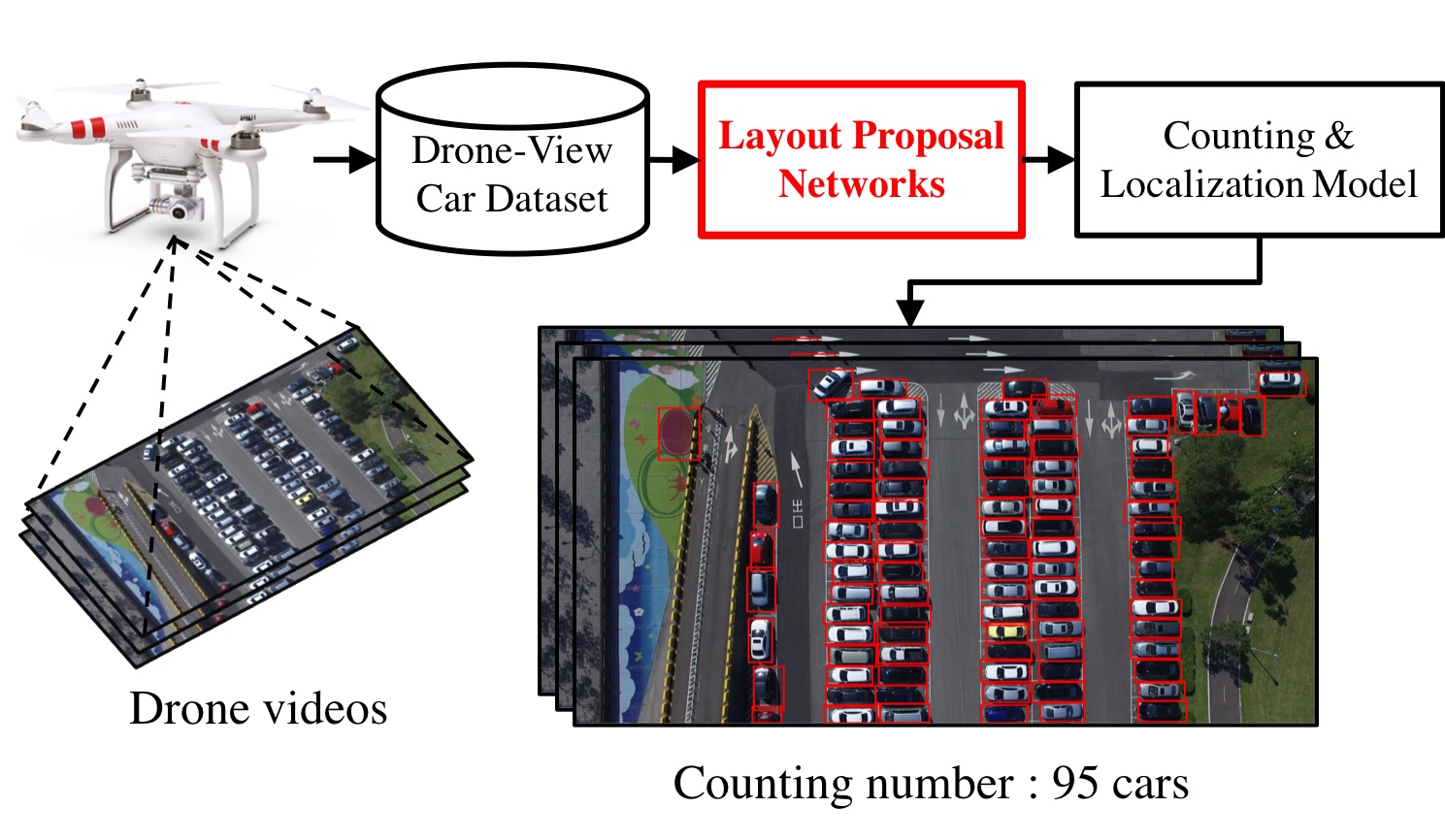

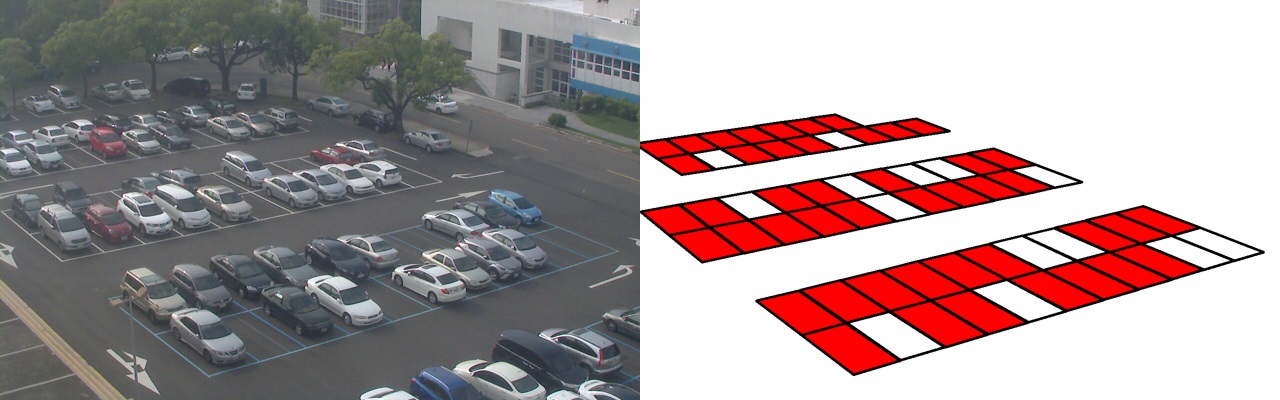

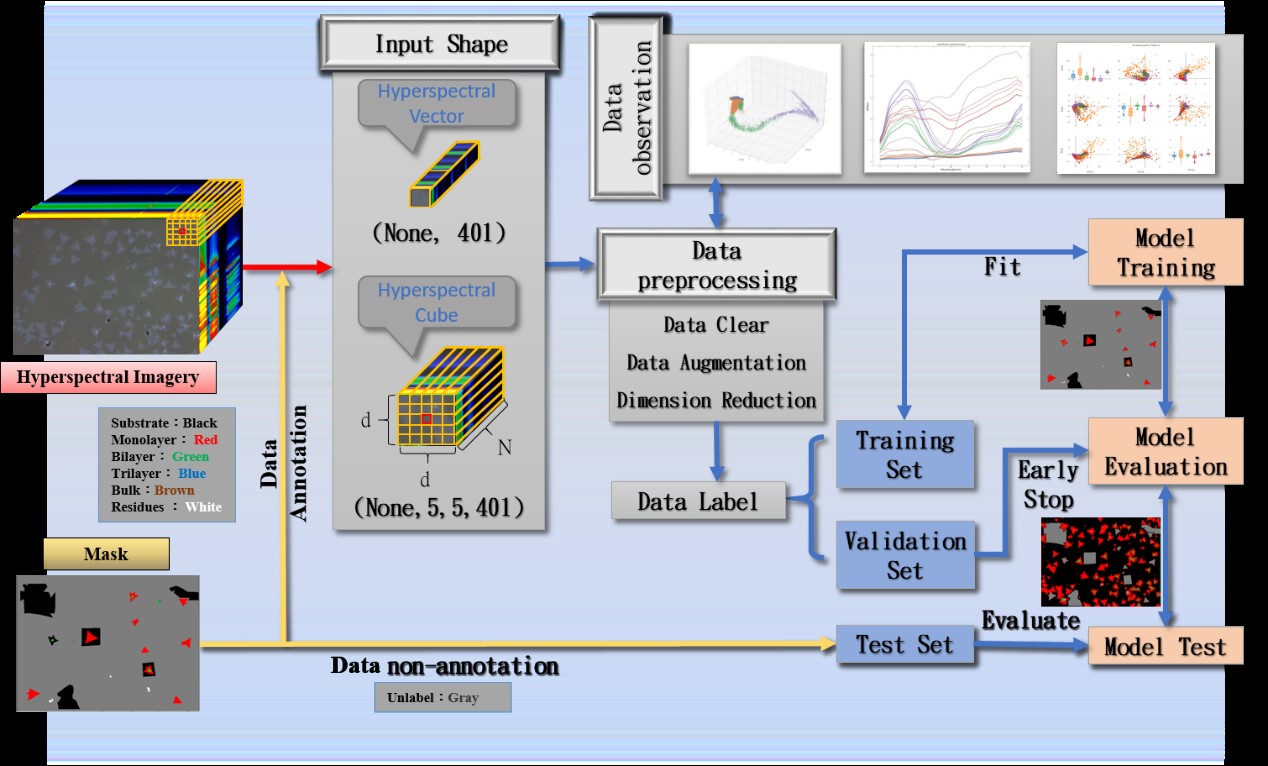

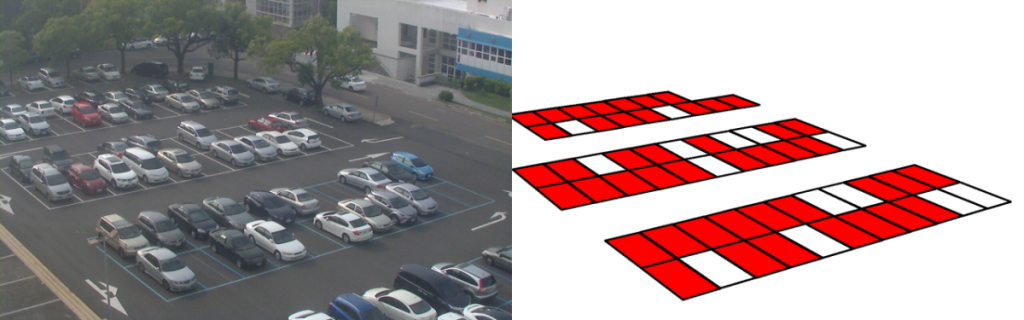

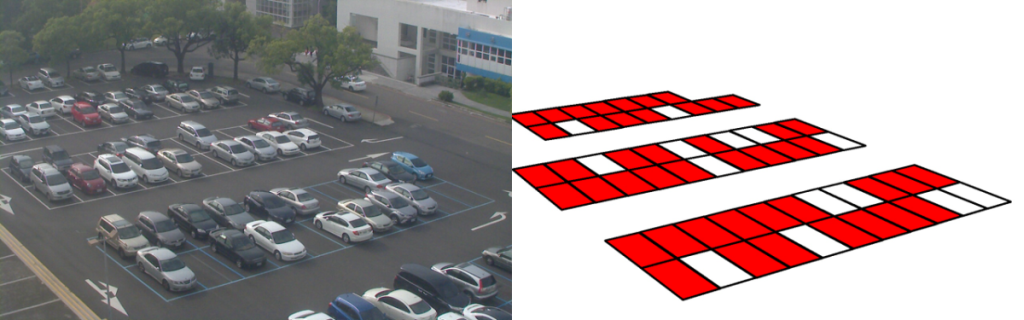

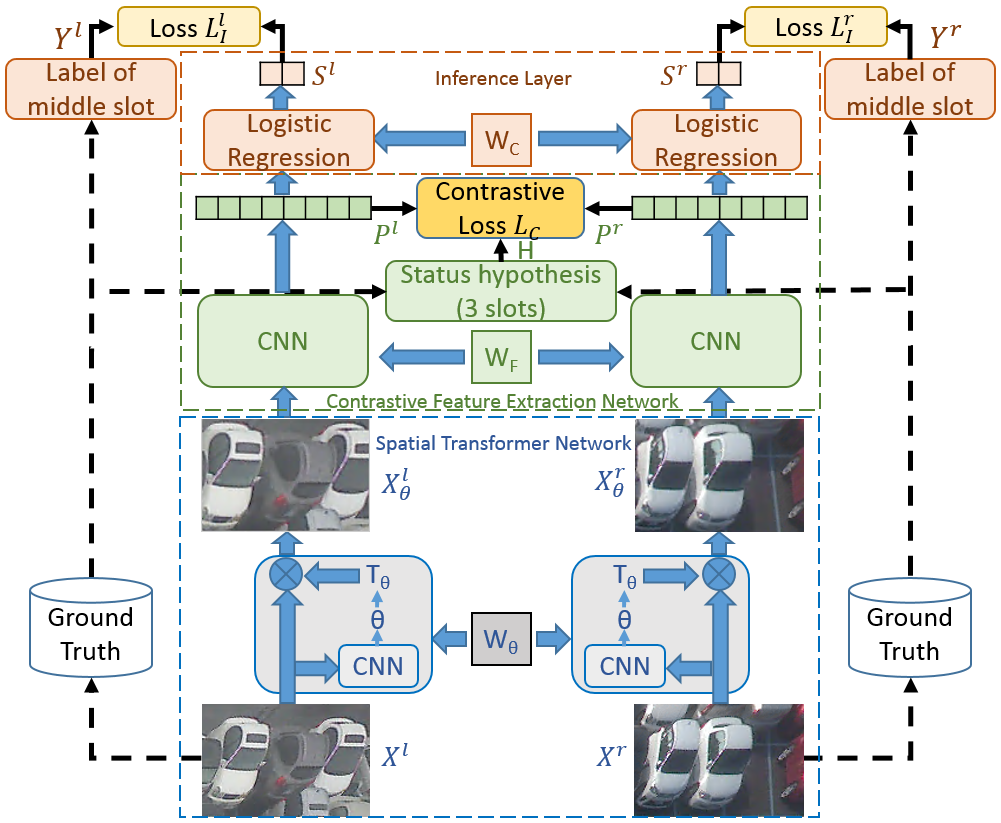

| Summary | Previous methods relied on camera geometry and a projection matrix to select the image region of each parking space for status classification. With suitable hand-crafted features, outdoor lighting variation and perspective distortion can be handled well. However, once parking displacement, non-uniform car sizes, and inter-object occlusion are also considered, the problem becomes considerably harder. To address these issues systematically, we propose a deep convolutional network designed to overcome these challenges. |


| Scientific Breakthrough | This technology combines three modules to achieve a robust system. |


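| Industrial Applicability | Parking-space management systems currently on the market are mainly mechanical or sensor-based. Such systems have been widely discussed and deployed, and they deliver good, stable results. However, because they rely primarily on sensors and other hardware, they offer little flexibility for functional extension, and their maintenance costs cannot be effectively reduced. This has prompted some experts and researchers to consider alternative approaches. In recent years, surveillance cameras have become increasingly common and their applications have drawn growing attention. A number of studies have therefore applied video surveillance systems to parking-lot management, developing software and algorithms that use image analysis to detect vacant parking spaces automatically and provide parking guidance services. |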

