| Technical Name |

3D Object Referring and Grasp Detection Networks |

| Project Operator |

National Taiwan University |

| Project Host |

徐宏民 |

| Summary |

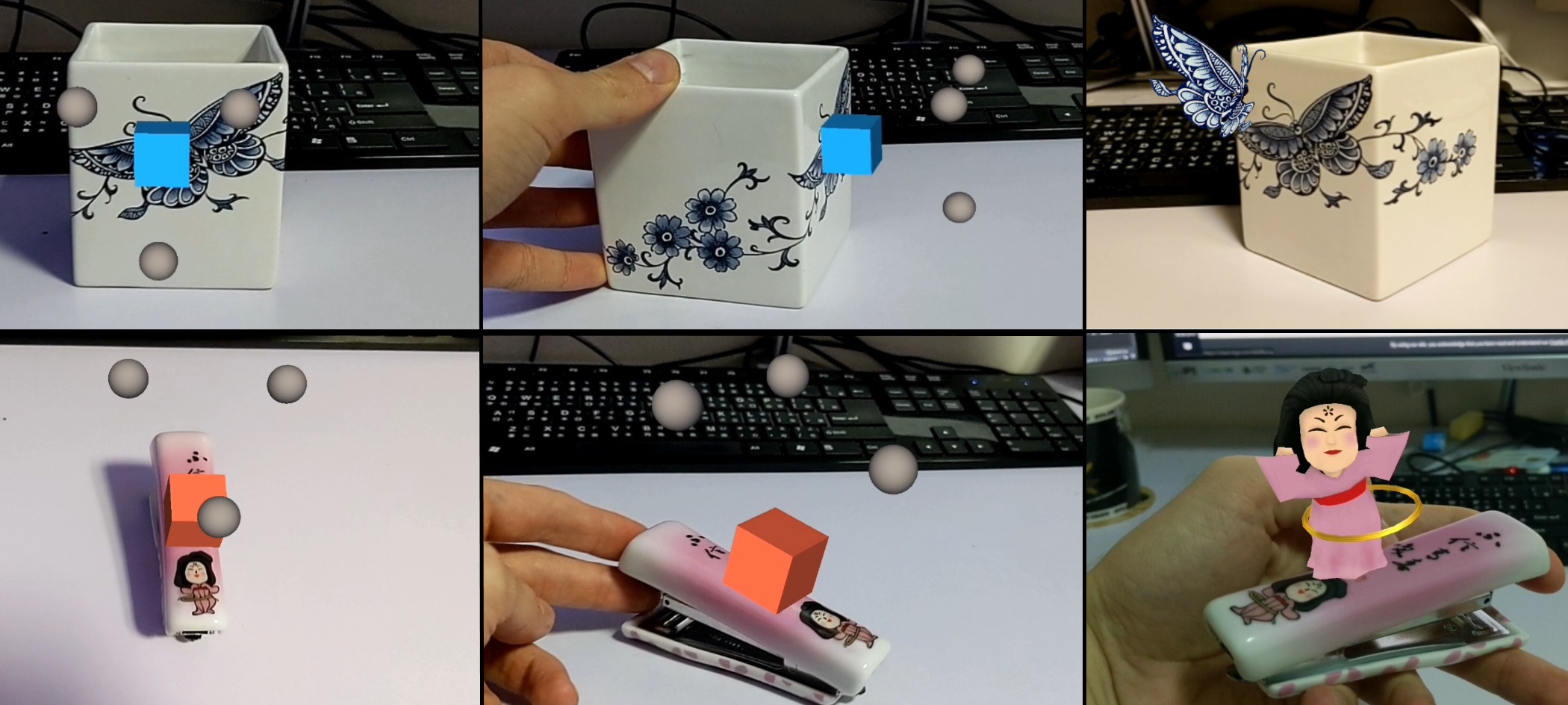

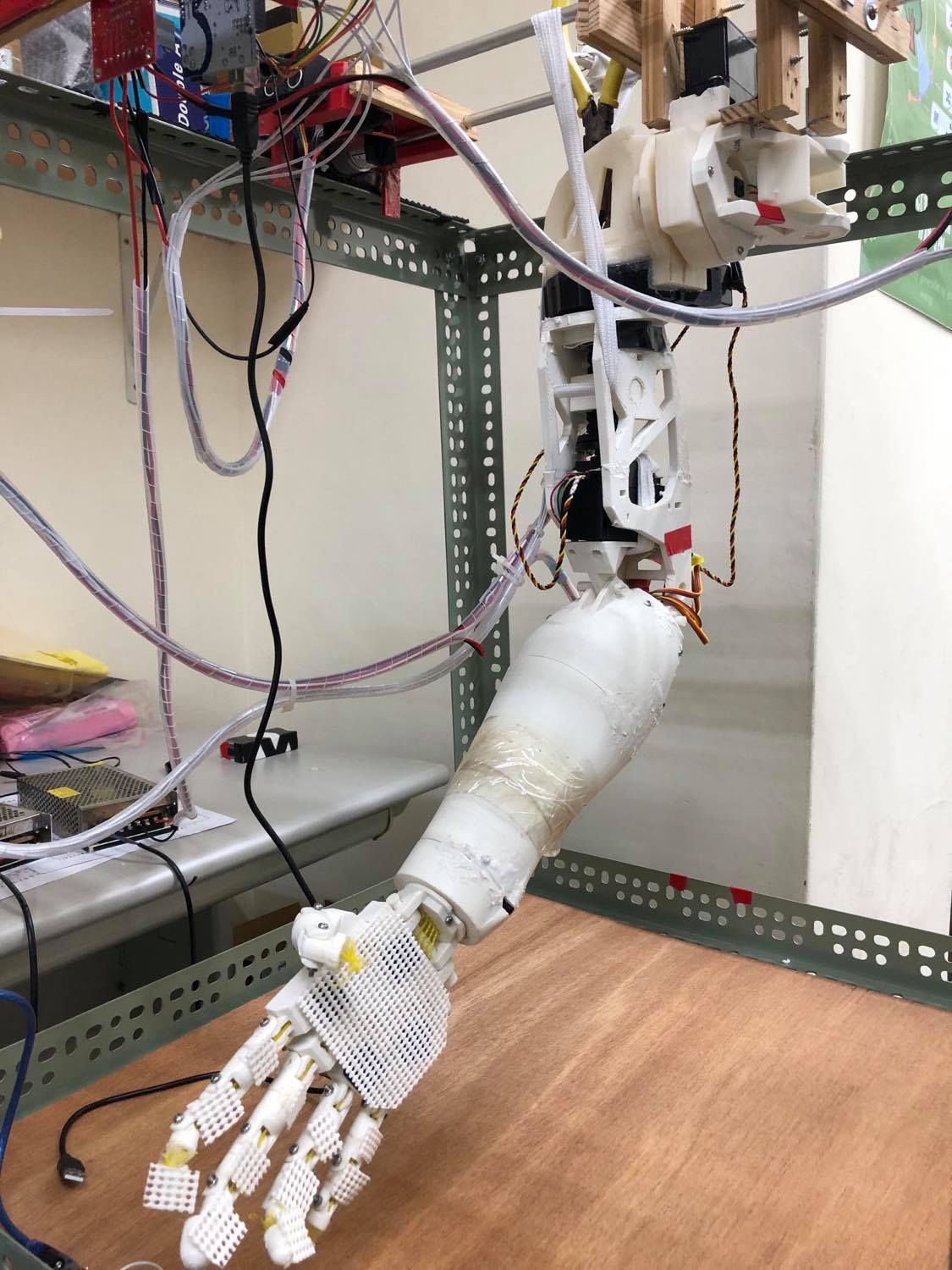

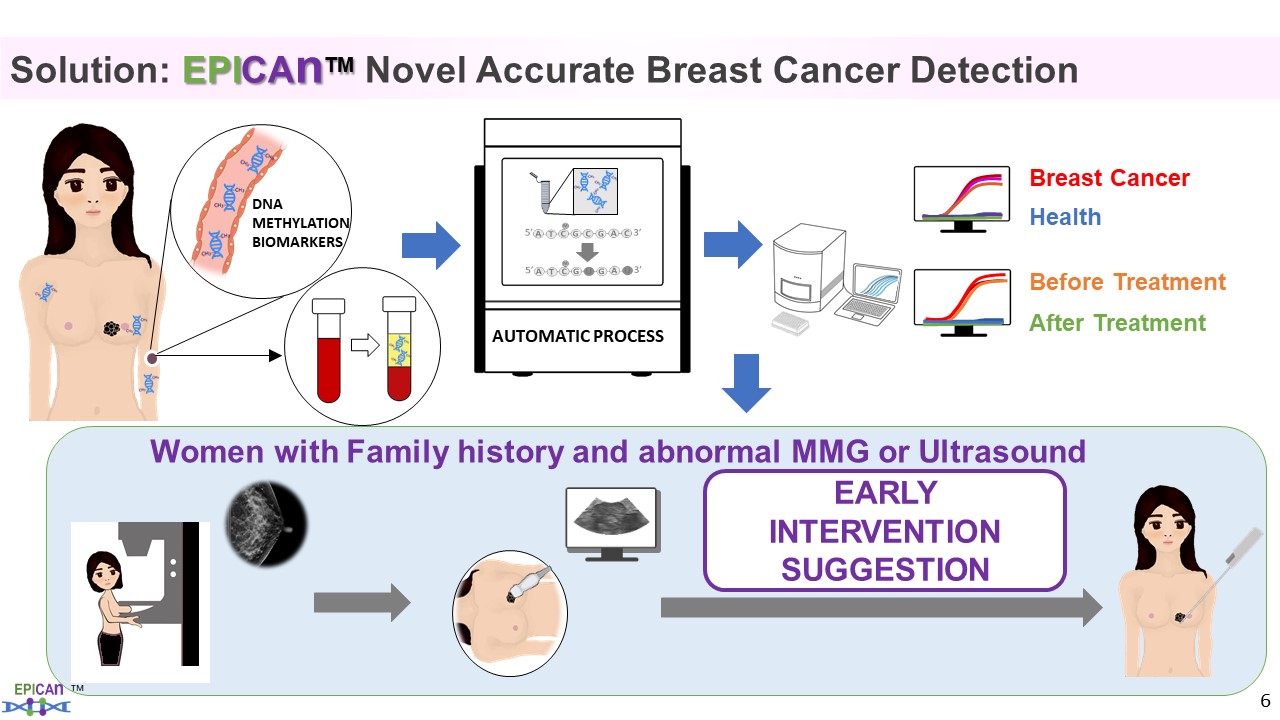

"It is expected that human will work with robots in the coming years. However, it is still unknown how both can collaborate together. Meanwhile, it is still very time-consuming to deploy intelligent robots in production lines. For this work, we investigate deep comprehension for 3D (point cloud)text (voice) signals to enable novel human-robotic object referring for robotic arms.

For the task |

| Scientific Breakthrough |

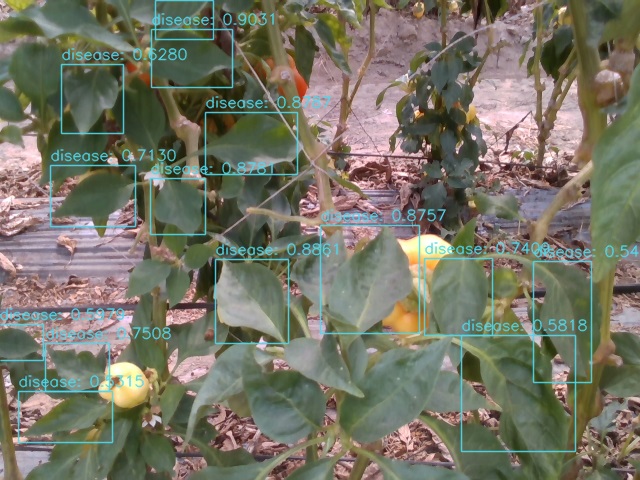

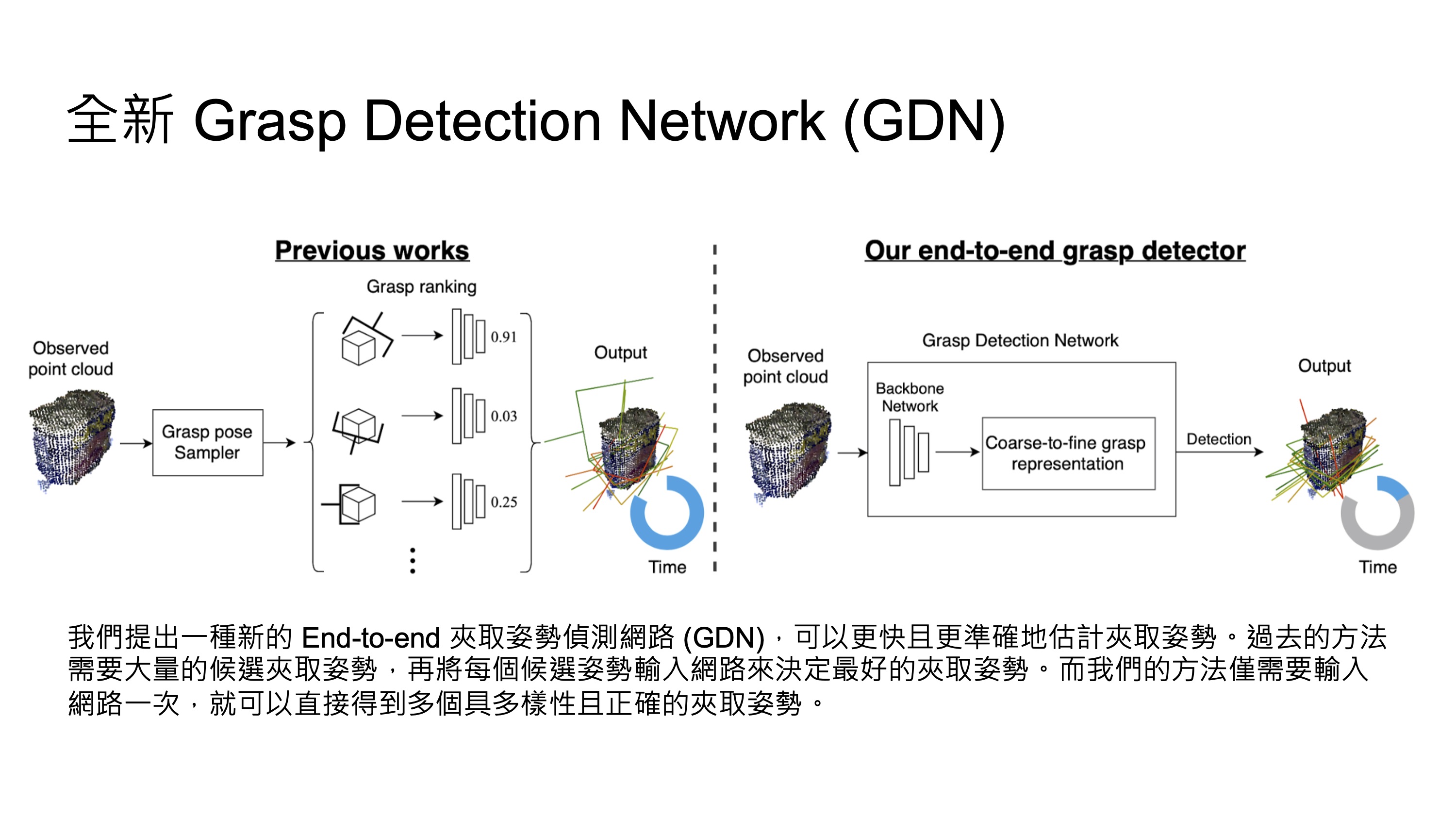

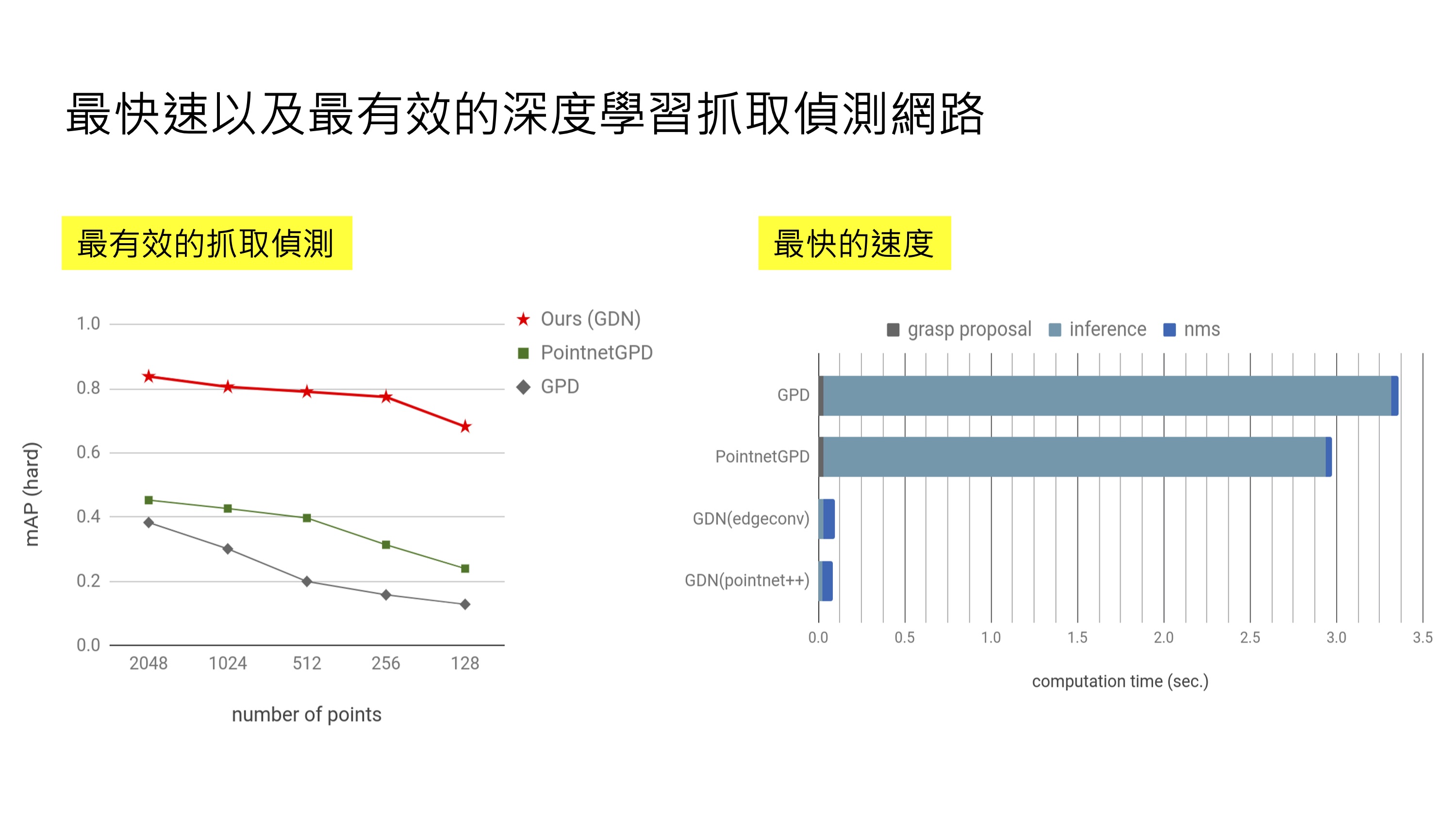

Advancing the critical task of grasp detection for robotic arms, the proposed GDN achieves the highest grasping success rate, is the most robust to sparse point clouds, and requires the least computation (a 30-fold reduction relative to prior methods). We argue for text-based object referring between humans and robots, and devise novel multimodal neural networks that take both text (voice) and 3D point clouds as input. Pioneer |

| Industrial Applicability |

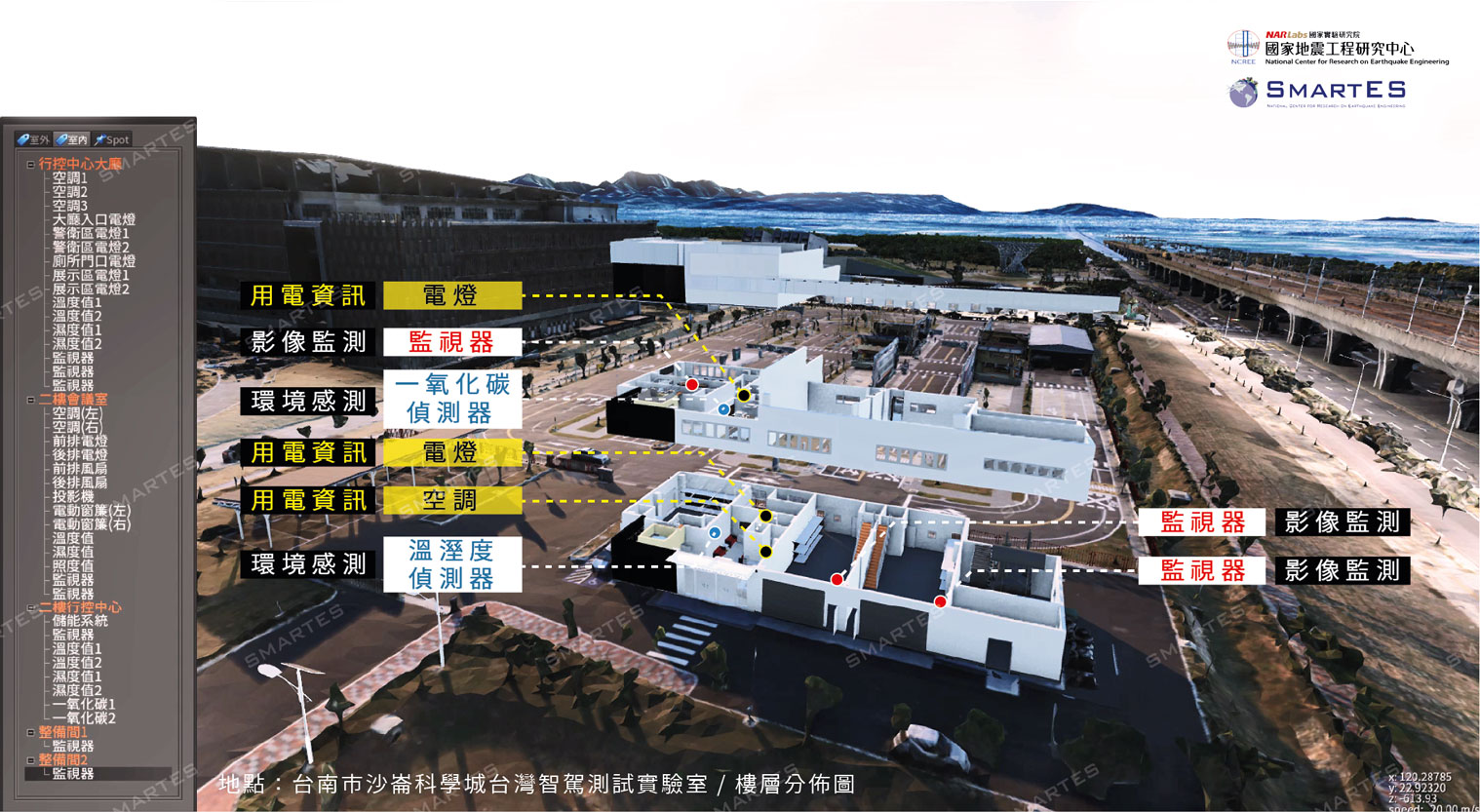

The proposed Grasp Detection Networks (GDN) can greatly reduce the deployment cost (time and human effort) for industrial production lines. The proposed language-based 3D object referring enables the human-robot interactions expected in the near future. Meanwhile, the frameworks are all end-to-end deep learning models augmented with few-shot learning. This will facilitate the smooth deployment of advanced deep learning solu |

| Matching Needs |

Our solutions provide 3D vision for accurate 6DoF robotic arm grasping in cluttered scenes using low-cost 3D sensors. We provide the most efficient and accurate grasp detection for unseen objects. We further explore few-shot learning for 3D vision to greatly reduce training time and data requirements. |

| Keyword |

robotic arm grasping, grasp detection, point cloud, 3D vision, 6DoF, deep learning, object referring, few-shot learning, cross-domain learning |