| Technical Name | Neural Network Design, Acceleration and Deployment based on HarDNet - A Low Memory Traffic Network |
|---|---|
| Project Operator | National Tsing Hua University |
| Project Host | Youn-Long Lin (林永隆) |

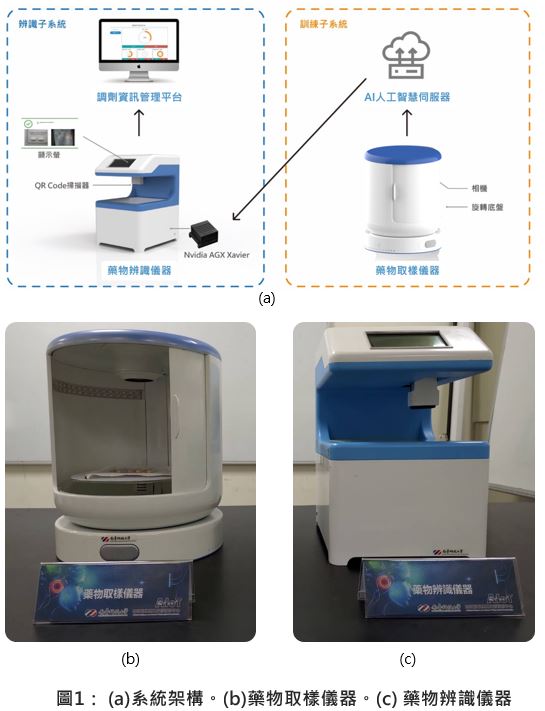

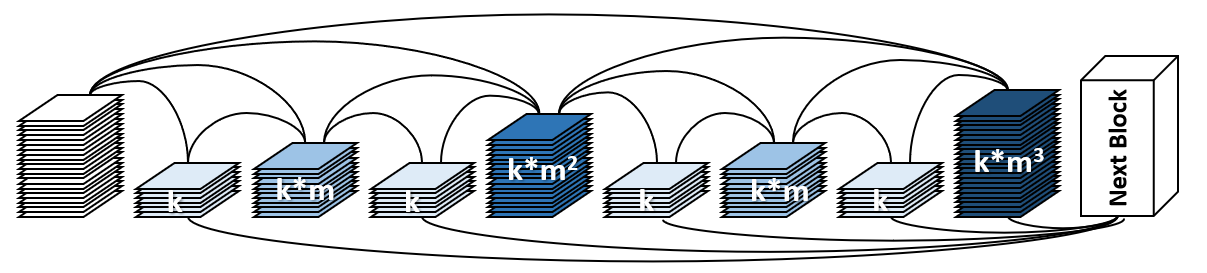

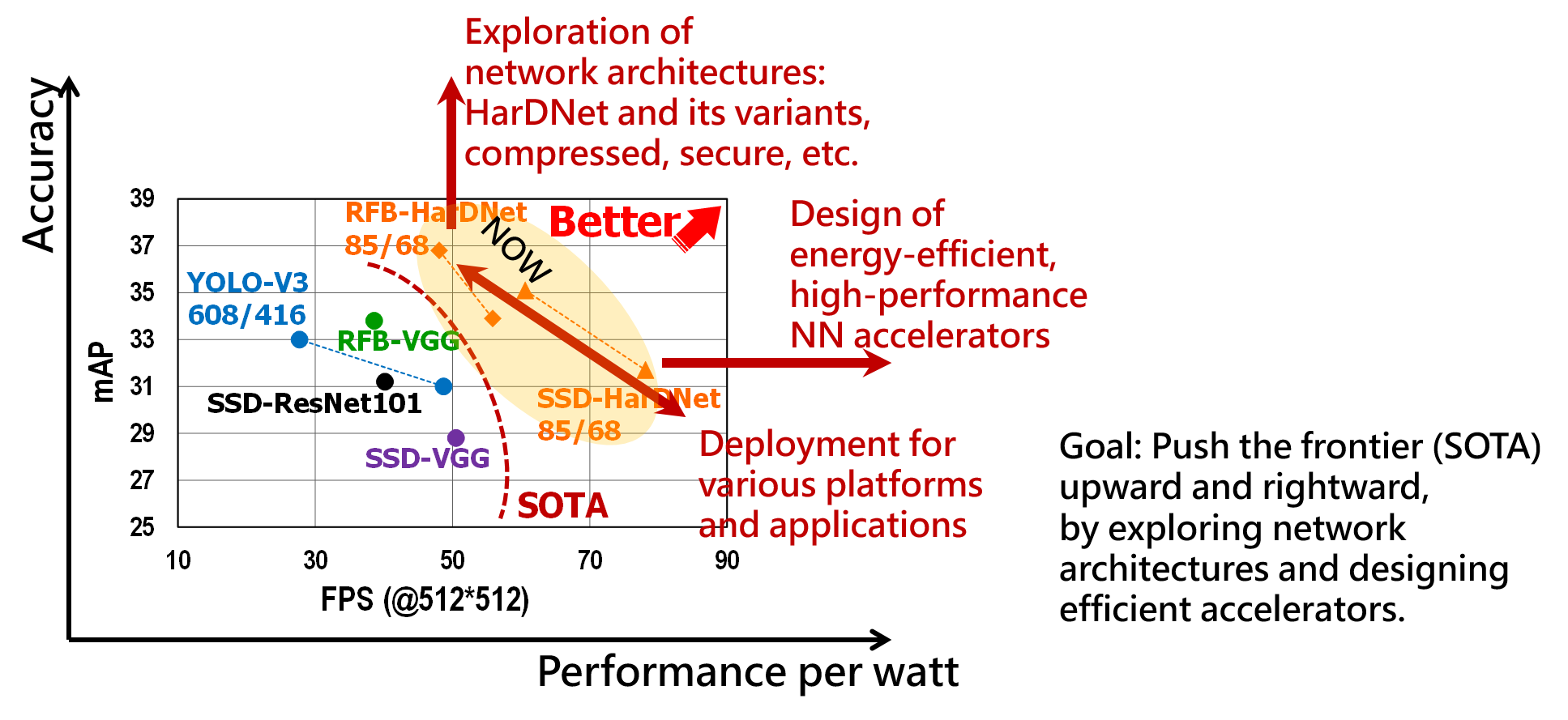

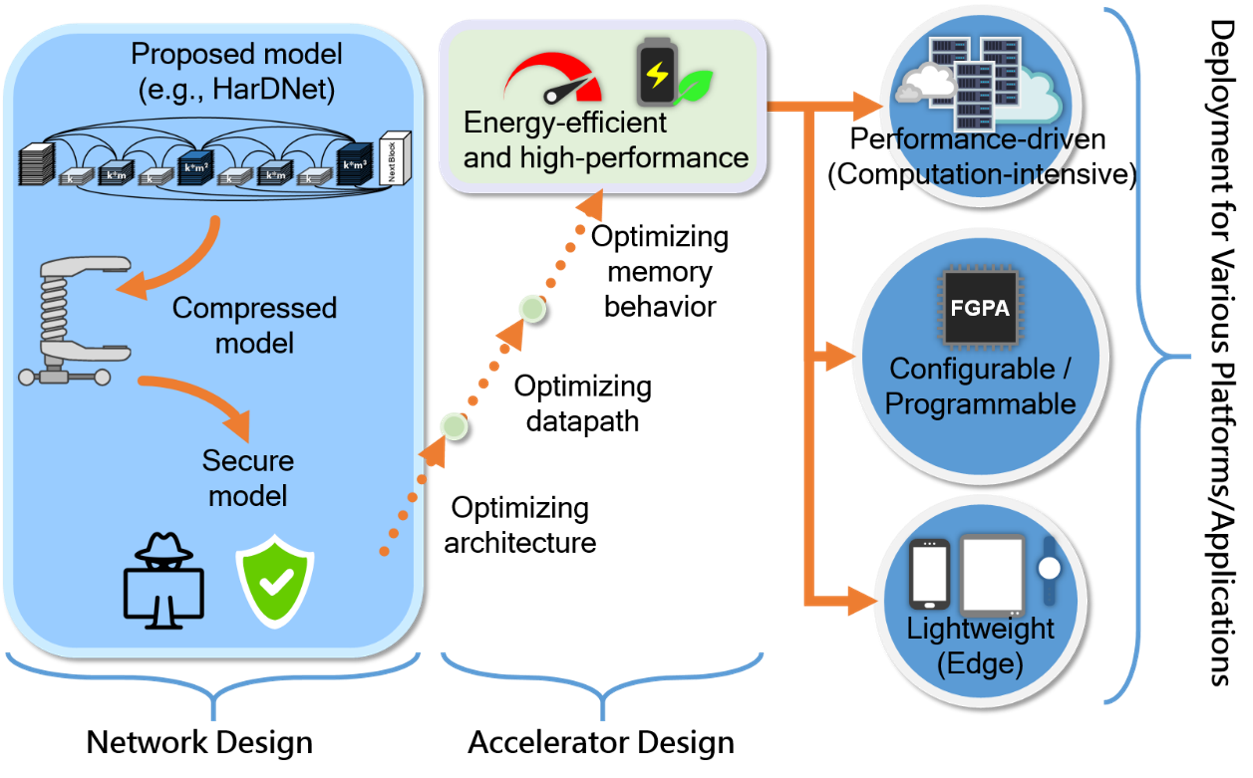

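| Summary | Building on HarDNet, a neural network backbone architecture published at the International Conference on Computer Vision (ICCV) 2019, our team will present several technical demonstrations, including: |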


| Scientific Breakthrough | Across computing platforms such as GPUs, FPGAs, and AI edge devices, HarDNet consistently achieves highly competitive speed and accuracy. In particular, for real-time semantic segmentation, HarDNet is ranked first worldwide and has been recognized as state of the art (SOTA). We have already deployed HarDNet on platforms with different power budgets, and we are now applying HarDNet and its variants to additional computer vision tasks. |


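| Industrial Applicability | 1. A major Silicon Valley smart-voice chip company has adopted the RNN acceleration solution developed by our team. Its high-end AI voice chip taped out in 2020, and the chip's estimated production value is expected to reach the order of US$100 million. |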


| Keyword | HarDNet (Harmonic DenseNet), Network Architecture, Hardware Accelerator, Edge AI Deployment, Model Compression, Security of Neural Networks, Approximate Computing |

- patty@cs.nthu.edu.tw
